AI Ready Embedded Boards for Real-Time Edge Intelligence

How Well Does Your Hardware Handle AI Workloads?

Not all boards are built for edge AI. The table below compares standard embedded boards with customized AI-ready solutions, showing how precision I/O, on-board inference, and field durability affect real-world deployments.

| Question | Standard Embedded Board | Customized AI Ready Board | Exclusive ODM by MiniITXBoard.com |
|---|---|---|---|
| Is it optimized for vision input? | Generic USB, CPU-bound | Synchronized CSI/MIPI lanes | Multi-camera sync w/ hardware triggers |
| Can it run real-time inference? | Needs cloud or external GPU | Integrated NPU or VPU | Tuned Jetson/TPU edge compute with BSP pinning |
| Will it survive rugged AI sites? | Fans + PSU dependent | -20°C to +70°C, fanless capable | IP-rated, sealed BOMs for field AI |
| Can it run on lightweight frameworks? | Linux only, no AI stack support | Supports TensorFlow Lite / ROS2 | Preloaded with JetPack, PyTorch Mobile, Yocto |

Custom AI Boards for Every Application Class

From low-power smart cameras to GPU-intensive automation, these categories help technical buyers align hardware with AI complexity, power budgets, and thermal envelopes.

Ultra-Compact Vision AI

Compact AI for Tight Spaces and Low Power Budgets

Built for smart kiosks, edge analytics, and entry-level inference

Sub-10W fanless compute with onboard NPU or Coral TPU

Ideal when board space and thermal limits are critical

Use it when:

- You need real-time vision under 10W

- Always-on operation is required without a cloud connection

- Your form factor is constrained (robotic grippers, drones)

Balanced Inference Gateways

General-Purpose AI for Mobile and Modular Platforms

Compute power from 20–60 TOPS

Balanced support for AI, sensor input, and networking

Ideal for AGVs, inspection robots, industrial gateways

Use it when:

- Real-time multi-stream AI is needed (e.g. factories, AGVs)

- You’re combining AI, sensors, and networking

- Long lifecycle and industrial temperature range are required

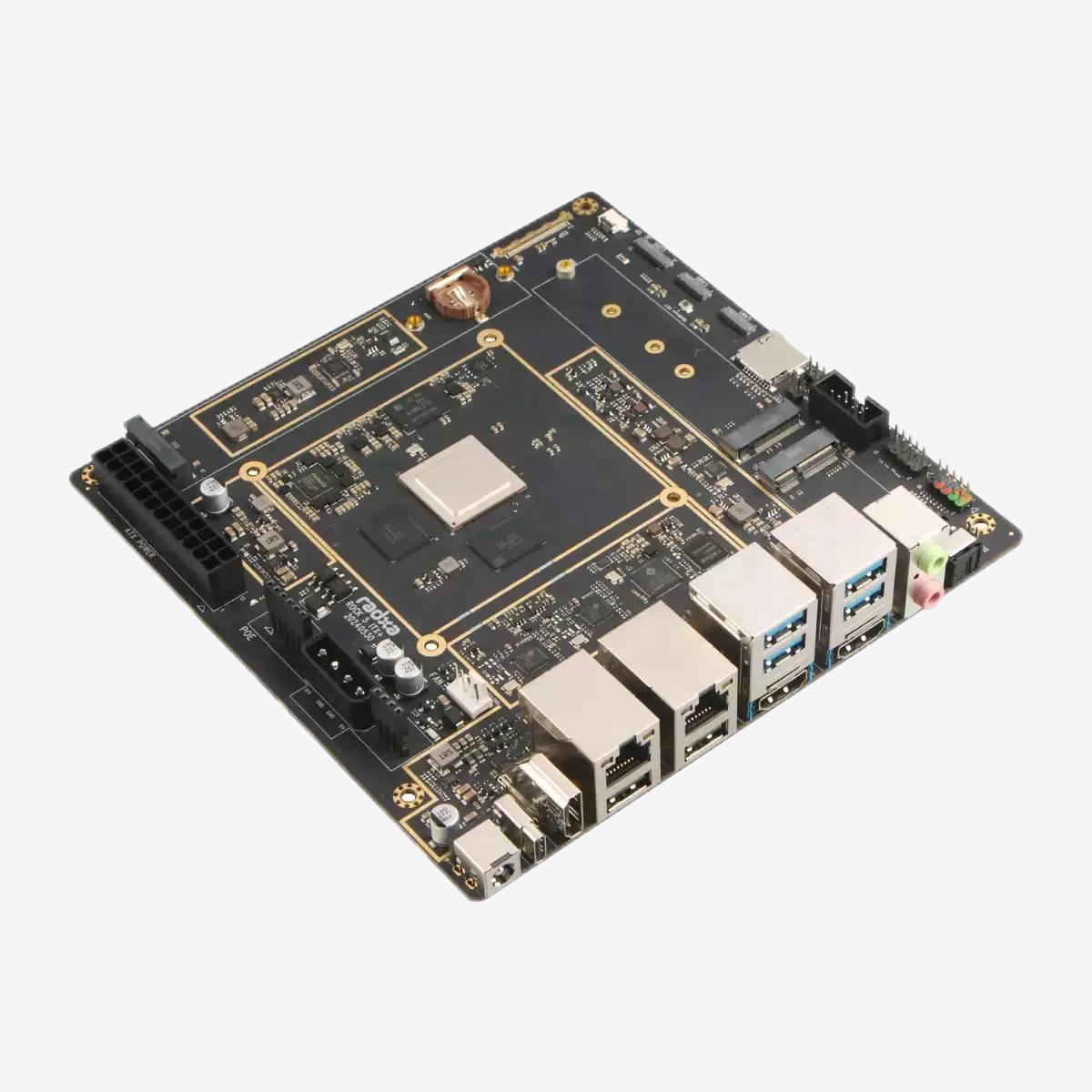

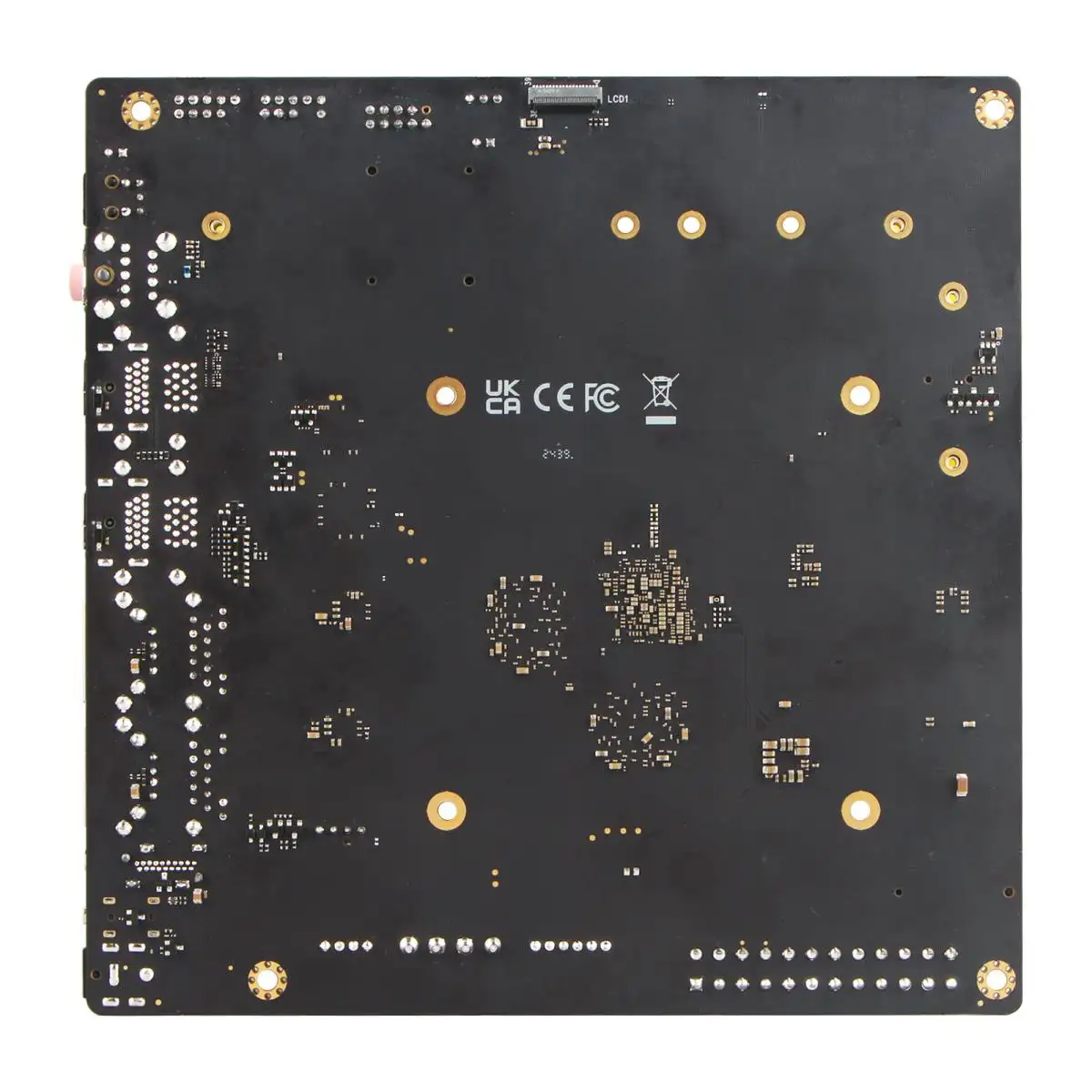

High-Performance Embedded AI

AI-Intensive, Safety-Critical, or Regulated Environments

Built with ECC memory, NPU+GPU acceleration

Designed for smart hospitals, security nodes, defense

24/7 compute with thermal safeguards and power failover

Use it when:

- You run multiple neural nets in parallel

- AI outputs are mission-critical and safety-linked

- Functional-safety compliance (e.g. IEC 61508, or ISO 26262 for automotive) is a requirement
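The three "Use it when" lists above can be read as a rough selection rule. The sketch below is one hedged way to encode it in Python. The sub-10W and 20–60 TOPS figures come from the class descriptions; the `Requirements` type, the `suggest_board_class` function, and the handling of cases that fall between the published ranges are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Hypothetical summary of a project's needs (not a vendor data model)."""
    power_budget_w: float          # total board power budget in watts
    tops_needed: float             # estimated inference throughput in TOPS
    safety_critical: bool = False  # AI outputs are safety-linked or regulated


def suggest_board_class(req: Requirements) -> str:
    """Map requirements to one of the three board classes named above."""
    # Safety-linked workloads, or anything beyond the 20-60 TOPS gateway
    # range, point to the high-performance class.
    if req.safety_critical or req.tops_needed > 60:
        return "High-Performance Embedded AI"
    # 20-60 TOPS is the range quoted for gateways. Treating any budget of
    # 10 W or more as gateway territory is an assumption of this sketch.
    if req.tops_needed >= 20 or req.power_budget_w >= 10:
        return "Balanced Inference Gateways"
    # Everything else fits the sub-10W compact class.
    return "Ultra-Compact Vision AI"


if __name__ == "__main__":
    print(suggest_board_class(Requirements(power_budget_w=8, tops_needed=4)))
```

A rule like this is only a first filter; thermal envelope, camera count, and lifecycle requirements still need to be checked against the interface table.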

Build with Confidence on a Custom AI-Ready Platform

Your AI deployment isn’t generic, and your hardware shouldn’t be either. Whether you’re building multi-camera edge vision, autonomous robotics, or real-time analytics nodes, we’ll help you design an AI Ready board that matches your requirements, from power and thermal layout to inference modules and a locked, long-lifecycle BOM.

- Supports NPU/GPU/TPU modules (Jetson, Coral, Movidius, etc.)

- 24/7 AI inference capability with thermal & voltage safeguards

- Locked BOM and firmware for production consistency

Precision Interfaces Built for AI Workloads

AI inference doesn’t run in isolation—it relies on fast, synchronized, and intelligently mapped I/O. This section breaks down how AI Ready platforms are customized across sensor input, compute routing, and deployment durability, ensuring performance matches application needs.

| Interface Category | Compact Vision AI Boards | Mid-Tier AI Gateways | Advanced AI-Embedded Systems |
|---|---|---|---|
| Neural Capture Interface | CSI-2 x2 with onboard sync for stereo camera vision | CSI-2 x6, programmable timing matrix for multiple sensor inputs | CSI-2 x8 with AI-timed trigger queue for simultaneous capture |
| Inference Compute Path | USB/NPU combo slot for real-time model calls under 10W | PCIe Gen3 lanes + local TensorRT support | Dual PCIe Gen4 + GPU integration for multi-threaded AI workloads |
| AI Startup Logic | eMMC-based neural net boot flow | NVMe boot with JetPack pre-integrated | Dual-boot redundancy with watchdog support for AI firmware updates |
| Sensor Control & Sync | 4x GPIO with soft interrupts | DMA-enabled GPIO + I²C block triggers | Sync-ready GPIO with deterministic response latency |
| Display & Edge HMI | HDMI 2.0 or LVDS for status output | HDMI + dual-channel eDP for AI dashboards | HDR-capable DSI for neural feedback loops in industrial HMI |
| AI Accelerator Expansion | M.2-E or USB slot for lightweight NPU modules | Dual M.2 for SSD + Wi-Fi/NPU hybrid | PCIe x4 for GPU, VPU, or AI ASIC cards |
| Voice/Audio AI Interface | I2S mono codec for keyword detection | Dual I2S for stereo audio ML inference | DSP audio processor with smart voice preprocessing |
| Power Resilience Layer | 5–12V input with undervoltage lockout | 9–24V support, EMC hardened | 9–36V tolerant, transient-proof, hot-swap ready |

Thermal & Environmental Engineering

Thermal Resilience & Power Strategy for AI-Centric Edge Boards

AI acceleration isn’t just about compute power—it’s about keeping systems cool and stable when pushing models 24/7 in edge environments. AI Ready boards are engineered for controlled thermals, tuned airflow, and shock-hardened power input to ensure non-stop inference even in unpredictable real-world deployments.

Zoned Cooling for AI Modules

Smart zoning across GPU/NPU hotspots and memory areas enables focused cooling without overuse of fans or ducting.

Passive + Active Hybrid Design

Boards support silent-mode startup with heatpipe arrays, scaling up fans only when inference bursts require it.
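A passive-first fan policy of this kind is usually built on hysteresis, so the fan does not chatter on and off around a single threshold. The following Python sketch illustrates the idea only: the function name `next_fan_duty`, the 60/70/90 °C thresholds, and the 30% minimum duty are all assumptions, not values from any board firmware.

```python
def next_fan_duty(temp_c: float, current_duty: int,
                  on_c: float = 70.0, off_c: float = 60.0,
                  max_c: float = 90.0) -> int:
    """Return the next fan duty cycle (0-100 %) using simple hysteresis.

    All thresholds are illustrative assumptions.
    """
    # Fan is off: stay passive until the turn-on threshold is reached.
    if current_duty == 0 and temp_c < on_c:
        return 0
    # Fan is running: switch back to passive only once the board has
    # cooled to the lower turn-off threshold.
    if current_duty > 0 and temp_c <= off_c:
        return 0
    # Active region: ramp linearly from 30 % at off_c to 100 % at max_c.
    frac = (temp_c - off_c) / (max_c - off_c)
    frac = min(max(frac, 0.0), 1.0)
    return round(30 + 70 * frac)


if __name__ == "__main__":
    duty = 0
    for temp in (55, 65, 72, 65, 59):
        duty = next_fan_duty(temp, duty)
        print(temp, duty)
```

The gap between the turn-on and turn-off thresholds is what keeps the system silent at idle while avoiding rapid toggling during short inference bursts.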

Predictive AI Throttling

Onboard thermal controllers react to model load types, not just temperature thresholds—ensuring smooth transitions under real-time pressure.
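To make the contrast with threshold-only throttling concrete, here is a minimal Python sketch of a load-aware policy. It is not the boards' controller logic: the workload names, the per-workload power figures in `LOAD_POWER_W`, the thermal resistance, and the linear power-versus-clock assumption are all hypothetical placeholders that would come from profiling on real hardware.

```python
# Hypothetical per-workload power draw in watts (would come from profiling).
LOAD_POWER_W = {
    "keyword_spotting": 3.0,
    "single_stream_vision": 9.0,
    "multi_stream_vision": 14.0,
}


def clock_scale(load: str, ambient_c: float, limit_c: float = 85.0,
                r_theta_c_per_w: float = 4.0, floor: float = 0.25) -> float:
    """Return a clock scale in [floor, 1.0] chosen before the load starts.

    Predicts steady-state temperature as ambient + R_theta * power and,
    assuming power scales roughly linearly with clock, picks the scale
    that keeps the prediction at or below limit_c.
    """
    power_w = LOAD_POWER_W[load]
    predicted_c = ambient_c + r_theta_c_per_w * power_w
    if predicted_c <= limit_c:
        return 1.0  # enough headroom, no throttling needed
    allowed_power_w = (limit_c - ambient_c) / r_theta_c_per_w
    return max(floor, allowed_power_w / power_w)


if __name__ == "__main__":
    print(clock_scale("single_stream_vision", ambient_c=40.0))
    print(clock_scale("multi_stream_vision", ambient_c=40.0))
```

Because the decision is taken from the expected load rather than from a measured overshoot, the clock is lowered once at the start of a heavy model instead of oscillating after the temperature limit has already been crossed.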

Wide-Range Input with Watchdog Reset

From mobile robots to remote kiosks, boards accept wide-range DC input (up to 9–36V on advanced systems; see the Power Resilience Layer row in the interface table) with transient filtering and timed reset logic.
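The timed reset logic mentioned here follows the usual watchdog pattern: the application must "kick" a timer at regular intervals, and a missed deadline triggers a reset. The Python sketch below models that pattern in software only; the `Watchdog` class and its injectable clock are illustrative assumptions, and on real hardware the reset would be issued by a hardware timer rather than by application code.

```python
import time
from typing import Callable


class Watchdog:
    """Software model of a timed-reset watchdog (illustrative only)."""

    def __init__(self, timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self._clock = clock            # injectable so the logic can be tested
        self._last_kick = clock()

    def kick(self) -> None:
        """Called by the inference loop to signal that it is still alive."""
        self._last_kick = self._clock()

    def expired(self) -> bool:
        """True once more than timeout_s has passed since the last kick."""
        return self._clock() - self._last_kick > self.timeout_s


if __name__ == "__main__":
    wd = Watchdog(timeout_s=0.05)
    wd.kick()
    time.sleep(0.1)                    # simulate a hung inference loop
    print("reset required:", wd.expired())
```

Kicking the watchdog from inside the inference loop, rather than from a separate housekeeping thread, ensures that a stalled model pipeline is what triggers the reset.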

Tested Against Field Failures

Systems are validated for -20°C to +70°C continuous operation and tolerate vibration, EMI, and input fluctuations without downtime.

Lifecycle Resilience for AI-First Deployments

In edge AI, model performance is only as reliable as the board’s stability over time. AI Ready boards are built with lifecycle foresight—from frozen firmware stacks to pre-qualified inference modules—to reduce design risk and ensure consistent AI behavior through every deployment phase.

Locked BOMs for ML Integrity

No last-minute hardware surprises. Every core component (VRMs, TPUs, memory ICs) is version-controlled and BOM-frozen for reproducibility.
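One simple way to make a frozen BOM verifiable is to fingerprint it and compare the fingerprint at each production run. The sketch below shows the idea in Python using a SHA-256 hash over a canonical JSON form; the `bom_fingerprint` function, the BOM layout, and the part numbers are hypothetical and used only for illustration.

```python
import hashlib
import json


def bom_fingerprint(bom: dict) -> str:
    """Return a SHA-256 hex digest of a BOM in canonical JSON form.

    Sorting keys makes the digest independent of entry order.
    """
    canonical = json.dumps(bom, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # Hypothetical part numbers and revisions, for illustration only.
    frozen = {
        "vrm": {"part": "VRM-EXAMPLE-01", "rev": "B"},
        "memory": {"part": "MEM-EXAMPLE-8G", "rev": "A"},
    }
    candidate = {
        "memory": {"part": "MEM-EXAMPLE-8G", "rev": "A"},
        "vrm": {"part": "VRM-EXAMPLE-01", "rev": "C"},  # silently revised part
    }
    print(bom_fingerprint(frozen) == bom_fingerprint(candidate))
```

Any substitution or revision change alters the digest, so a mismatch flags the build before it reaches the field.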

Validated Edge AI SoC Roadmaps

Boards align with 8+ year support windows for platforms like Jetson and Coral (Edge TPU), ensuring long-term field viability.

Driver & AI Stack Pinning

Pre-qualified SDKs, inference runtime versions (for example TensorRT), and kernel configs are locked at the factory to prevent drift during updates.
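Pinning is only useful if drift can be detected in the field. As a minimal sketch, a deployed unit could compare its installed versions against the factory manifest at boot. The `find_drift` helper, the component names, and the version strings below are hypothetical; how the installed versions are collected is left out.

```python
from typing import Dict, Optional, Tuple


def find_drift(pinned: Dict[str, str],
               installed: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {component: (expected, found)} for every pinned component
    whose installed version is missing or different."""
    drift = {}
    for component, expected in pinned.items():
        found = installed.get(component)  # None if the component is absent
        if found != expected:
            drift[component] = (expected, found)
    return drift


if __name__ == "__main__":
    # Hypothetical manifest and field readings, for illustration only.
    pinned = {"kernel": "5.15.0-pinned", "sdk": "1.2.0", "runtime": "8.6.1"}
    installed = {"kernel": "5.15.0-pinned", "sdk": "1.3.0"}
    for name, (expected, found) in find_drift(pinned, installed).items():
        print(f"{name}: expected {expected}, found {found}")
```

An empty result means the unit still matches the qualified stack; anything else can block model loading or raise a maintenance alert.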

Real-World Functions Enabled by AI Ready Mini-ITX Boards

From factory vision nodes to mobile robotics and AI-powered kiosks, AI Ready platforms are engineered to run inference directly at the edge rather than merely support it. The functions below show how our AI Ready boards enable low-latency, power-aware, and sensor-integrated computing in critical real-time environments.

Onboard Vision Processing

Run object classification, anomaly detection, and live tracking locally—on systems under 15W—without needing cloud roundtrips.

Offline AI Decision-Making

Deploy YOLO detectors and other models in TensorFlow Lite or ONNX format right on the board to support speech, gesture, and safety-zone applications, with no internet connection required.

Sensor-Driven AI Loops

Seamlessly integrate camera, LiDAR, and IMU inputs with GPIO-triggered ML tasks—perfect for factory automation, warehouse bots, and mobile platforms.
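Combining camera, LiDAR, and IMU data generally means aligning samples by timestamp before they reach the model. The Python sketch below shows one common approach, nearest-timestamp matching with a maximum allowed skew. The `nearest_sample` function and the example timestamps are assumptions for illustration and do not describe a specific board API.

```python
from bisect import bisect_left
from typing import Optional, Sequence


def nearest_sample(timestamps: Sequence[float], t: float,
                   max_skew: float) -> Optional[int]:
    """Return the index of the sample closest to time t, or None if the
    closest one is farther away than max_skew.

    `timestamps` must be sorted in ascending order (seconds).
    """
    if not timestamps:
        return None
    i = bisect_left(timestamps, t)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    best = min(candidates, key=lambda j: abs(timestamps[j] - t))
    return best if abs(timestamps[best] - t) <= max_skew else None


if __name__ == "__main__":
    imu_ts = [0.000, 0.005, 0.010, 0.015]   # hypothetical 200 Hz IMU samples
    frame_ts = 0.0062                        # hypothetical camera frame time
    print(nearest_sample(imu_ts, frame_ts, max_skew=0.002))
```

Rejecting matches beyond `max_skew` keeps the model from fusing a frame with stale sensor data when one input stalls.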

AI in Low-Power, Fanless Environments

Boards support full edge inference under thermally constrained designs. Great for sealed kiosks, mobile carts, and battery-powered field gear.

Explore Engineering Insights for AI-Ready Embedded Systems

Stay informed with practical design knowledge built around real-world AI deployment at the edge. Our editorial content dives into selecting NPUs, optimizing vision pipelines, tuning I/O for ML sensors, and managing lifecycle-critical AI boards. Whether you’re developing robotic control systems, autonomous inspection units, or compact vision terminals, our blog equips technical buyers and engineering teams with the guidance they need to build AI-ready systems that perform under pressure.
